Introduction

As I mentioned in my previous post, I enrolled in the Linux as a server course immediately when I started at Haaga-Helia. It was an excellent choice, and now I am continuing my Linux studies with the Linux project course. In this course everybody is free to choose their own project. It has to solve some kind of problem, but basically you can do whatever kind of project you like. This is my project for Tero Tuoriniemi's Linux project course.

What would you do if something bad happened to your server while you were in the middle of nowhere without an Internet connection? How cool would it be if you could handle that situation just by sending an SMS to your server to reboot it, or to get some information and try to solve the problem some other way? I would say it would be at least pretty cool!

I am going to show how to actually do it with these tools:

- Debian

- Apache + php

- Twilio.com account + number

- iPhone

- Mail server for sending email

- API key from Ilmatieteenlaitos (the Finnish Meteorological Institute) for weather data

Table of contents:

Introduction

Starting point

Examples

Php script for controlling Linux via sms (this goes to your server)

How the script works

Script done, then what?

Setting up the twilio.com for sms-control

What next?

Conclusion

Starting point

I have Debian running on a Linode virtual private server. Apache and PHP are configured and tested (I like to use DigitalOcean's tutorial for it).

You will also need an account at Twilio.com. It is a service which provides “sms-tools”. Basically you can buy a number and send SMS messages to it. Twilio then passes each message sent to that number on to somewhere else. In this case “somewhere” is our Debian server.

Examples

Using my service is super easy. For security reasons I made it work only with messages sent from my personal number, so it is not available for public use. If you like, you can build it for yourself with this guide.

When I want to reboot my system, I do the following:

SMS-message:

boot

Send to number:

+12345678 (this is not the real number, but similar)

After the message is sent, the server reboot process starts in less than 2 seconds and I get this SMS as a response:

The system is going down for reboot NOW! :)

Sometimes I want to check the uptime of my server, and I do this:

SMS-message:

exec uptime

Send to number:

+12345678 (this is not the real number, but similar)

In a few seconds I get something like this as a response:

19:30:44 up 1 day, 6:08, 0 users, load average: 0.01, 0.02, 0.05

A few days ago I was so lazy that I did not want to get out of bed just to read the temperature from the thermometer, so I asked my server:

SMS-message:

ilma helsinki

Send to number:

+12345678 (this is not the real number, but similar)

In a few seconds I get something like this as a response:

Bunny friendly temperature: 1.46 helsinki

Next I will show you how to make this all happen! 🙂

Php script for controlling Linux via sms (this goes to your server)

When you send an SMS to your Twilio number, Twilio receives it as plain text and forwards it to a URL specified in your Twilio account settings. In my case I have this URL in the settings: http://myserverip/sms-control.php. This script is what actually makes the magic happen, e.g. the reboot. It checks the message forwarded by Twilio and acts depending on its content. Finally it compiles XML data, which Twilio reads and sends back to the original sender. For example, if your message requests a reboot, the server responds with “The system is going down for reboot NOW! :)” and you get that message via SMS.

Full script:

[php]<?php
// The first 4 characters of the message declare the purpose (mail, ilma, exec or boot)
$action = substr($_REQUEST['Body'], 0, 4);
// The rest of the message (after the separating space) is the actual content
$message = substr($_REQUEST['Body'], 5, 150);
// Get the sender's number
$number = $_REQUEST['From'];
// Kept as a string so that the leading + is preserved
$allowedNumber = '+358400630148';
// Check the sender's number
if ($number !== $allowedNumber) {
    exit();
}
if (strpos($message, '@') !== false && $action == 'mail') {
    // To reach this point the message has to be like: mail someone@example.com message-you-would-like-to-send
    $spacePos = strpos($message, ' ');
    $mailTo = substr($message, 0, $spacePos);
    $message = substr($message, $spacePos, 100);
    // escapeshellarg() keeps the SMS text from being interpreted by the shell
    shell_exec('echo '.escapeshellarg($message).' | mail -s SMS-mail -a "From: put-your-own-email-here@example.com" '.escapeshellarg($mailTo));
    $result = 'mail sent';
} else if ($action == 'ilma') {
    // To reach this point the message has to be like: ilma helsinki (only works with Finnish cities/places)
    date_default_timezone_set('Europe/Helsinki');
    $pvm = date('Y-m-d');
    $time = date('H:00:00');
    $location = substr($_REQUEST['Body'], 5, 40);
    // Fallback reply if the response contains no temperature
    $result = 'weather data not found';
    $dom = new DomDocument();
    // Takes data from Ilmatieteenlaitos open data
    // For this to work please add your API key. You can get it for free from https://ilmatieteenlaitos.fi/rekisteroityminen-avoimen-datan-kayttajaksi
    $dom->loadXML(file_get_contents("http://data.fmi.fi/fmi-apikey/set-your-own-api-key-here/wfs?request=getFeature&storedquery_id=fmi::forecast::hirlam::surface::point::timevaluepair&place=".urlencode($location)."&parameters=temperature&starttime=".$pvm."T".$time."Z&endtime=".$pvm."T".$time."Z"));
    $tempSource = $dom->getElementsByTagNameNS("http://www.opengis.net/wfs/2.0", "member");
    foreach ($tempSource as $m) {
        $point = $m->getElementsByTagNameNS("http://www.opengis.net/waterml/2.0", "point");
        foreach ($point as $p) {
            $tempTime = $p->getElementsByTagName("time")->item(0)->nodeValue;
            $temperature = $p->getElementsByTagName("value")->item(0)->nodeValue;
            $result = "Bunny friendly temperature: ".$temperature." ".$location;
        }
    }
} else if ($action == 'exec') {
    // To reach this point the message has to be like: exec ls
    $result = shell_exec($message);
} else if ($action == 'boot') {
    // To reach this point the message has to be: boot
    shell_exec('sudo /home/tuukka/reboot.sh');
    $result = 'The system is going down for reboot NOW! :)';
} else {
    $result = 'command not found';
}
// Compiles the XML data for Twilio. This is what Twilio is looking for.
header("content-type: text/xml");
echo "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n";
?>
<Response>
<Message><?php echo htmlspecialchars((string) $result, ENT_XML1, 'UTF-8') ?></Message>
</Response>
[/php]
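The reply part at the end of the script can also be looked at on its own. The following is only a minimal sketch: `buildTwimlReply` is a hypothetical helper name that is not part of my script, and it assumes the reply is a single `<Message>` inside `<Response>`, as in the script above. It escapes characters such as `<` and `&`, so that command output cannot break the XML.

```php
<?php
// Hypothetical helper (not part of the original script): wraps a reply text
// in the <Response><Message> XML that the script prints for Twilio.
function buildTwimlReply(string $text): string
{
    // Escape &, < and > so that the reply text stays valid XML.
    $escaped = htmlspecialchars($text, ENT_XML1, 'UTF-8');

    return "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n"
        . "<Response><Message>" . $escaped . "</Message></Response>";
}

echo buildTwimlReply('load < 0.05 & all good'), "\n";
```

With a helper like this the script could end with `header("content-type: text/xml"); echo buildTwimlReply($result);`.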

How the script works

(If you do not care how it works, or you are just too eager to test it immediately, you can skip this part. Please note, though, that to use the weather task you need an API key from Ilmatieteenlaitos.)

This script can do four different types of tasks: send mail, get the temperature of a specific location, execute a command in the shell, or reboot the system. If the message matches none of these, the script returns the string “command not found”. I am going to explain how these different tasks work. But…

First of all the script gathers basic information:

[php]// The first 4 characters of the message declare the purpose (mail, ilma, exec or boot)
$action = substr($_REQUEST['Body'], 0, 4);
// The rest of the message (after the separating space) is the actual content
$message = substr($_REQUEST['Body'], 5, 150);
// Get the sender's number
$number = $_REQUEST['From'];
// Kept as a string so that the leading + is preserved
$allowedNumber = '+358400630148';
// Check the sender's number
if ($number !== $allowedNumber) {
    exit();
}[/php]

It takes the first four letters of the message and stores them in the $action variable, which is used to route the request (which task to do). Then it puts the rest of the message into the $message variable. This is the actual “command” which will be executed. After that it takes the number the message was sent from and stores it in the $number variable. Finally it checks that $allowedNumber and $number are the same. If not, the script terminates and nothing happens. This last step makes sure that no one else can, for example, reboot your system. So the only way to control the server is to send a message from the number specified as $allowedNumber.

At this point we have two important variables: $action and $message.

$action defines what the user wants done (which one of those four tasks)

$message defines what kind of information the user gave (content of the email, location for the weather, or the command to be executed)
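The splitting and the sender check can be tried out without Twilio. This is just a sketch under my own assumptions: `parseSms` and `isAllowedSender` are hypothetical helper names, not functions from the script, and the number is the same made-up one used in the examples.

```php
<?php
// Hypothetical helper: splits an SMS body into the 4-letter action and the
// rest of the text, the same way the script does with substr().
function parseSms(string $body): array
{
    $action = substr($body, 0, 4);               // e.g. "exec"
    $message = (string) substr($body, 5, 150);   // text after the separating space
    return [$action, $message];
}

// Hypothetical helper: true only when the sender is exactly the allowed number.
function isAllowedSender(string $from, string $allowed): bool
{
    return $from === $allowed;
}

list($action, $message) = parseSms('exec uptime');
echo $action, ' / ', $message, "\n";
var_dump(isAllowedSender('+12345678', '+12345678'));
```

Comparing the numbers as strings with `===` means the leading `+` and every digit have to match exactly.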

1. Task: sending mail

[php]if (strpos($message, '@') !== false && $action == 'mail') {
    // To reach this point the message has to be like: mail someone@example.com message-you-would-like-to-send
    $spacePos = strpos($message, ' ');
    $mailTo = substr($message, 0, $spacePos);
    $message = substr($message, $spacePos, 100);
    // escapeshellarg() keeps the SMS text from being interpreted by the shell
    shell_exec('echo '.escapeshellarg($message).' | mail -s SMS-mail -a "From: put-your-own-email-here@example.com" '.escapeshellarg($mailTo));
    $result = 'mail sent';
}[/php]

If $message contains an @ character and $action is the string mail, this task will be executed.

The script then looks for the first space, stores the string before it in the $mailTo variable and uses it as the email address to send the mail to. The rest of $message is the actual content of the mail. Finally the script stores the string “mail sent” in the $result variable. This is the string which is going to be the response to the original SMS.
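The address and text splitting can be sketched as a small function. This is an illustration, not the code from my script: `parseMailCommand` is a hypothetical name, and checking the address with `filter_var` is an extra step I am assuming here.

```php
<?php
// Hypothetical helper: splits "address text..." into recipient and mail text.
// Returns null when there is no space or the address does not look valid.
function parseMailCommand(string $message): ?array
{
    $spacePos = strpos($message, ' ');
    if ($spacePos === false) {
        return null; // nothing after the address
    }

    $mailTo = substr($message, 0, $spacePos);
    $text = trim((string) substr($message, $spacePos + 1, 100));

    // Extra check (an assumption, not in the original): reject odd addresses.
    if (filter_var($mailTo, FILTER_VALIDATE_EMAIL) === false) {
        return null;
    }

    return ['to' => $mailTo, 'text' => $text];
}

print_r(parseMailCommand('someone@example.com hello from sms'));
```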

2. Task: check weather

[php]else if ($action == 'ilma') {
    // To reach this point the message has to be like: ilma helsinki (only works with Finnish cities/places)
    date_default_timezone_set('Europe/Helsinki');
    $pvm = date('Y-m-d');
    $time = date('H:00:00');
    $location = substr($_REQUEST['Body'], 5, 40);
    // Fallback reply if the response contains no temperature
    $result = 'weather data not found';
    $dom = new DomDocument();
    // Takes data from Ilmatieteenlaitos open data
    // For this to work please add your API key. You can get it for free from https://ilmatieteenlaitos.fi/rekisteroityminen-avoimen-datan-kayttajaksi
    $dom->loadXML(file_get_contents("http://data.fmi.fi/fmi-apikey/set-your-own-api-key-here/wfs?request=getFeature&storedquery_id=fmi::forecast::hirlam::surface::point::timevaluepair&place=".urlencode($location)."&parameters=temperature&starttime=".$pvm."T".$time."Z&endtime=".$pvm."T".$time."Z"));
    $tempSource = $dom->getElementsByTagNameNS("http://www.opengis.net/wfs/2.0", "member");
    foreach ($tempSource as $m) {
        $point = $m->getElementsByTagNameNS("http://www.opengis.net/waterml/2.0", "point");
        foreach ($point as $p) {
            $tempTime = $p->getElementsByTagName("time")->item(0)->nodeValue;
            $temperature = $p->getElementsByTagName("value")->item(0)->nodeValue;
            $result = "Bunny friendly temperature: ".$temperature." ".$location;
        }
    }
}[/php]

If $action is the string ilma, this task will be executed. First it sets the timezone, gets the current date and time, and takes $location from the SMS. Then it loads XML data from Ilmatieteenlaitos, which publishes its observations and forecasts as open data. To get the temperature it uses the current date and hour stored in the $pvm and $time variables. Finally it stores the temperature and location in the $result variable, which is sent back to the SMS sender.
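The XML handling can be tested offline with a small sample. Note the assumptions: the XML below is hand-written and heavily simplified, not a real response from Ilmatieteenlaitos, and `extractTemperature` is a hypothetical helper name. Only the two namespaces and the element names come from the script.

```php
<?php
// Hand-written, simplified sample (NOT a real FMI response): only the
// namespaces and element names the script looks for are kept.
$xml = <<<XML
<wfs:FeatureCollection xmlns:wfs="http://www.opengis.net/wfs/2.0"
                       xmlns:wml2="http://www.opengis.net/waterml/2.0">
  <wfs:member>
    <wml2:point>
      <wml2:MeasurementTVP>
        <wml2:time>2015-01-01T12:00:00Z</wml2:time>
        <wml2:value>1.46</wml2:value>
      </wml2:MeasurementTVP>
    </wml2:point>
  </wfs:member>
</wfs:FeatureCollection>
XML;

// Hypothetical helper: returns the last temperature value found, or null.
function extractTemperature(string $xml): ?string
{
    $wml2 = 'http://www.opengis.net/waterml/2.0';
    $dom = new DOMDocument();
    if (!$dom->loadXML($xml)) {
        return null;
    }

    $temperature = null;
    foreach ($dom->getElementsByTagNameNS($wml2, 'point') as $point) {
        $value = $point->getElementsByTagNameNS($wml2, 'value')->item(0);
        if ($value !== null) {
            $temperature = $value->nodeValue;
        }
    }
    return $temperature;
}

echo 'Bunny friendly temperature: ', extractTemperature($xml), ' helsinki', "\n";
```

Returning null when no value is found makes it easy to answer with a fallback text instead of an empty SMS.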

3. Task: execute command in shell

[php]else if ($action == 'exec') {
    // To reach this point the message has to be like: exec ls
    $result = shell_exec($message);
}[/php]

If $action is the string exec, this task will be executed. As you can see, this is very simple. $message is executed in the shell, and the output (for ls, for example, a list of the files in the directory) is stored in the $result variable and sent back to the original SMS sender.
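Because this task runs whatever text arrives, a more cautious variant would be to accept only commands from a fixed list. This is not how my script works, just a sketch of the idea: `$allowedCommands` and `resolveCommand` are hypothetical names, and the keywords are made up for illustration.

```php
<?php
// Hypothetical allow-list: SMS keyword => exact command that may be run.
$allowedCommands = [
    'uptime' => 'uptime',
    'disk'   => 'df -h /',
];

// Returns the command for a keyword, or null when it is not on the list.
function resolveCommand(string $message, array $allowed): ?string
{
    $key = trim($message);
    return $allowed[$key] ?? null;
}

$command = resolveCommand('disk', $allowedCommands);

// In the script, a non-null $command would then be passed to shell_exec().
echo $command ?? 'command not found', "\n";
```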

4. Task: reboot system

[php]else if ($action == 'boot') {
    shell_exec('sudo /home/tuukka/reboot.sh');
    $result = 'The system is going down for reboot NOW! :)';
}[/php]

If $action is the string boot, this task will be executed. This task is a little bit tricky. On Debian and Ubuntu the system is easy to reboot with the command reboot, but it requires root privileges. PHP's built-in shell_exec function does not have sudo privileges by default. I solved the problem by writing a shell script, reboot.sh, and specifying in the sudoers file that www-data may run that file with sudo.

reboot.sh (in my system it is in path /home/tuukka/reboot.sh):

[raw]#!/bin/sh

reboot[/raw]

In /etc/sudoers I have added this line:

[ini]www-data ALL=NOPASSWD: /home/tuukka/reboot.sh[/ini]

Script done, then what?

Simply put this script under your public_html or whatever folder is accessible via the Internet. In my case the script is in /var/www/sms-control.php.

Now I make a promise. The following step will be the last!

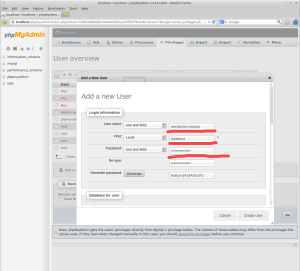

Setting up the twilio.com for sms-control

Head to twilio.com and create a new account. After that you will need to enter your credit card details and load some money into your account. Then click the Numbers link and Buy a number.

Next, set the search method to sms and click search.

Then choose any number from the list and click Buy.

Then you have to confirm your purchase.

After that, head back to Numbers and click on the number you just purchased.

Then scroll down and set the URL which points to your sms-control.php script.

Good news: all done, and everything should work! Try sending an SMS to the number you just purchased.

What next?

Well, I have actually done something already. Would it be super cool to get information about your home via SMS? I think it would, and I have started by measuring temperature. I made my Raspberry Pi measure the temperature and store it in a database, and upgraded sms-control.php to check the current temperature from the database. I am going to explain this task in part 2.

The next thing I would like to do is build a surveillance camera from a Raspberry Pi and get photos via SMS (hopefully this means there will be a part 3 :).

Conclusion

To get this to work you will need to have:

- A Linux server with Apache and PHP

- An account at Twilio.com

- A number purchased from Twilio.com

- The PHP script on your Linux server (and a URL to it)

- If you use mine, you will need to set your own phone number, email address and API key in it

- The URL set in the Twilio.com number's settings

More information: https://www.twilio.com/docs

sms-control.php on GitHub: https://github.com/RakField/control-linux-sms

This post is made in cooperation with Haaga-Helia University of Applied Sciences.

This blog is dedicated to topics which are somehow related to Information and communications technology. Especially GNU/Linux, security, web development and other IT-related news and ideas are in spotlight. Above all I am going to publish things that gets my attention!
